How to Build an Amazon Web Crawler With Python In 2025 for Effective Scraping?

Mar 07

Introduction

In digital commerce, an Amazon web crawler written in Python has become a valuable asset for businesses seeking competitive intelligence and market insights. As e-commerce continues to expand, the ability to extract and analyze product data efficiently is more than a convenience: it can give a business a real strategic advantage in a data-driven marketplace.

Introduction to Web Scraping in 2025

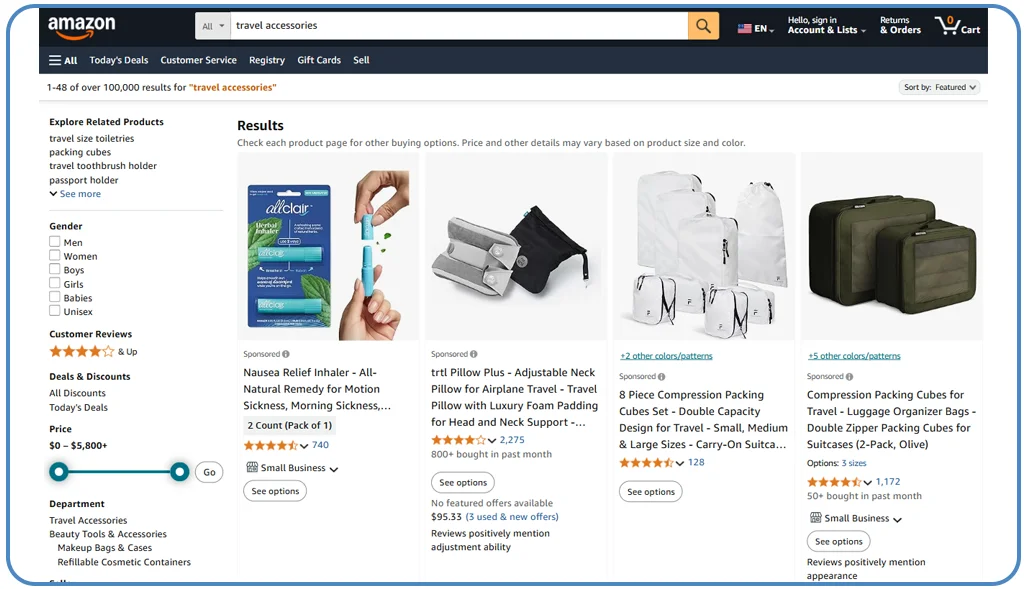

As the digital landscape continues to evolve in 2025, the need for sophisticated Amazon Web Crawler techniques has become more critical than ever. Traditional data collection methods are no longer sufficient due to the growing complexity of website structures and the implementation of advanced anti-scraping measures.

To develop a robust Amazon Data Scraping solution, businesses and developers must adopt a strategic approach that leverages the latest technological advancements while ensuring efficiency, accuracy, and compliance with evolving web regulations.

Understanding Web Scraping for E-commerce

Python Web Scraping has transformed how businesses extract and utilize online data for strategic decision-making. By automating data collection, companies can efficiently monitor competitor activities, identify emerging market trends, and optimize pricing strategies. This technology eliminates manual research, ensuring accurate and real-time insights that drive business growth.

An Amazon Product Scraper is essential for e-commerce businesses seeking a competitive edge. It enables seamless data extraction from Amazon’s vast marketplace, helping businesses track product prices, analyze consumer demand, and evaluate competitor offerings. With automated and structured data retrieval, companies can make informed decisions that enhance their sales strategies and improve overall market positioning.

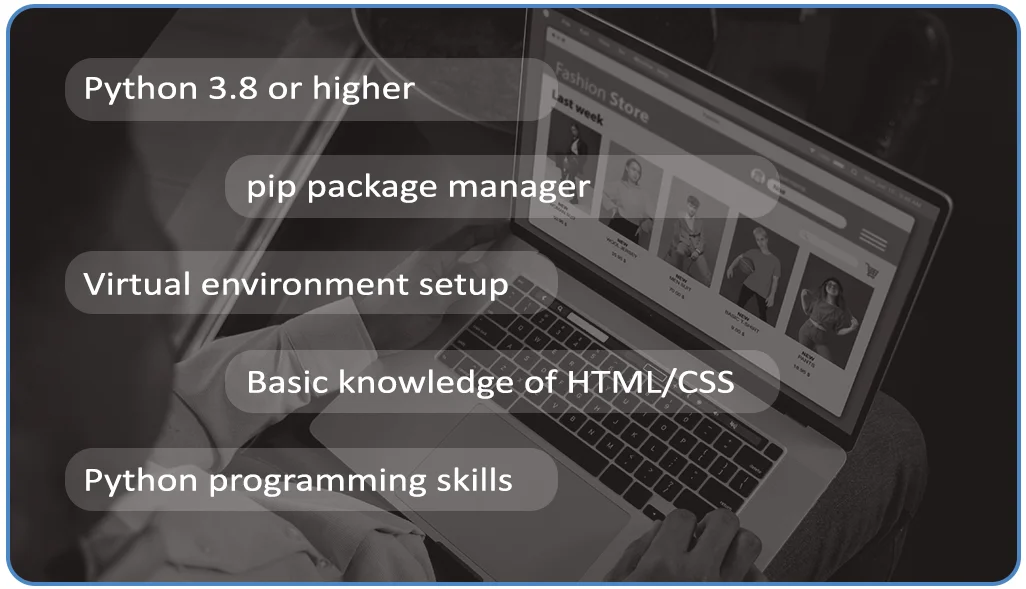

Essential Prerequisites for Developing a Web Crawler :

Before you begin with Automated Amazon Data Collection, it is crucial to ensure you have the necessary tools and foundational knowledge in place:

- Python 3.8 or higher : Ensure that your system has Python version 3.8 or later installed, as it includes necessary features and optimizations for web scraping.

- pip package manager : A crucial tool for installing and managing dependencies required for the scraper.

- Virtual environment setup : Helps isolate dependencies and maintain a clean development environment.

- Basic knowledge of HTML/CSS : Essential for navigating and extracting structured data from web pages.

- Python programming skills : Proficiency in Python is required to implement scraping logic, handle data extraction, and manage libraries efficiently.

With these prerequisites in place, you'll be well prepared to build an effective and responsible web crawler.

Step-by-Step Implementation of Amazon Web Crawler Python Script

This guide walks through a structured way to implement an Amazon web crawler as a Python script, with a focus on efficiency and accuracy. Follow these steps to develop a robust web scraping solution:

1. Environment Setup

First, ensure your system is configured correctly with the necessary tools and dependencies.

Follow these steps:

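- Install Python : Ensure you have Python 3.8 or later installed, matching the prerequisites above. If not, download and install it from the official Python website.

- Set up a Virtual Environment : Creating a virtual environment to manage dependencies is recommended, for example with python -m venv venv followed by activating it. Then install the libraries with pip install requests beautifulsoup4 pandas, and begin the script with the imports and user-agent list below: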

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd
    from time import sleep
    import random

    # Configure user agents for request rotation
    USER_AGENTS = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)'
    ]

2. Request Configuration

In this phase, the Request Configuration is set up to ensure efficient and reliable data retrieval from Amazon's website. This step involves defining the necessary headers and user-agent strings, which reduce the chance of detection and blocking, and setting request timeouts so that a stalled connection does not hang the script.

Key aspects of Request Configuration include:

- Setting Up Headers : Custom headers, including User-Agent, Accept-Language, and Referer, help mimic real-user behavior.

- Handling Request Timeouts : Implementing timeouts prevents the script from hanging indefinitely.

- Rotating User Agents & Proxies : Using multiple user agent strings and proxy servers enhances anonymity and reduces the risk of being blocked.

- Managing Retry Mechanism : Implementing a retry strategy lets the script recover from temporary failures such as connection timeouts. A CAPTCHA challenge is better treated as a signal to slow down than as something a plain retry will fix.

By carefully configuring requests, the Amazon Web Crawler Python Script can operate more efficiently while reducing the risk of detection.

    def create_amazon_request(url):
        # Pick a random user agent per request so traffic is less uniform
        headers = {
            'User-Agent': random.choice(USER_AGENTS),
            'Accept-Language': 'en-US,en;q=0.9'
        }
        try:
            # A timeout keeps the script from hanging on a stalled connection
            response = requests.get(url, headers=headers, timeout=10)
            response.raise_for_status()
            return response
        except requests.RequestException as e:
            print(f"Request Error: {e}")
            return None
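The retry mechanism described in the list above is not part of create_amazon_request as written. One possible way to add it is a small wrapper that retries with exponential backoff and random jitter. This is a sketch under stated assumptions rather than a definitive implementation: fetch_with_retries, max_attempts, and base_delay are hypothetical names, and fetch stands for any callable that returns a response on success and None on failure, as create_amazon_request does.

```python
import random
import time


def fetch_with_retries(url, fetch, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it returns a non-None result or attempts run out.

    fetch is any callable that returns None on failure (a hypothetical
    contract, matching create_amazon_request). sleep is injectable so the
    function can be tested without waiting.
    """
    for attempt in range(max_attempts):
        result = fetch(url)
        if result is not None:
            return result
        if attempt < max_attempts - 1:
            # Exponential backoff: base_delay, 2*base_delay, 4*base_delay, ...
            # plus up to one second of random jitter so retries do not align.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 1))
    return None
```

A call would then look like fetch_with_retries(url, create_amazon_request). The delay values here are illustrative and should be tuned to the target site's tolerance.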

3. Data Extraction Logic

In this phase, the script processes the fetched web pages to extract relevant data. Python Web Scraping Tools like BeautifulSoup or Scrapy parse the HTML structure, identify key elements, and retrieve product details, including name, price, ratings, and reviews. The extracted data is then structured for further processing and storage.

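    def extract_product_details(soup):
        """Return name, price and rating; a field is None when its element is missing."""
        def text_of(tag, attrs):
            # soup.find returns None when nothing matches, so guard before reading .text
            element = soup.find(tag, attrs)
            return element.text.strip() if element else None

        rating = text_of('span', {'class': 'a-icon-alt'})
        product_data = {
            'name': text_of('span', {'id': 'productTitle'}),
            'price': text_of('span', {'class': 'a-price-whole'}),
            # Keep only the first token of the rating text, as the original logic did
            'rating': rating.split()[0] if rating else None
        }
        return product_data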
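The values returned by extract_product_details are raw strings, so the "structured for further processing and storage" step still needs some cleaning. The sketch below shows one way to do that, assuming prices may carry thousands separators or a trailing decimal point. The names clean_product and products_to_csv are hypothetical, and the standard csv module is used only to keep the example dependency-free; pandas, imported earlier, would work as well.

```python
import csv
import io


def clean_product(raw):
    """Convert raw scraped strings into typed values; unparseable fields become None."""
    def to_float(text):
        if text is None:
            return None
        # Drop thousands separators and a trailing decimal point, e.g. "1,299." -> "1299"
        cleaned = text.strip().replace(',', '').rstrip('.')
        try:
            return float(cleaned)
        except ValueError:
            return None

    return {
        'name': raw.get('name'),
        'price': to_float(raw.get('price')),
        'rating': to_float(raw.get('rating')),
    }


def products_to_csv(products):
    """Serialize cleaned product dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=['name', 'price', 'rating'])
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()
```

Returning None for unparseable values, instead of raising, keeps one malformed listing from stopping a whole run; the trade-off is that missing values must be handled downstream.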

Complete Python Web Crawler Code Sample

    def scrape_amazon_products(search_query):
        base_url = f"https://www.amazon.com/s?k={search_query.replace(' ', '+')}"
        products = []
        try:
            # Reuse the helper from step 2 so headers and error handling stay in one place
            response = create_amazon_request(base_url)
            if response is None:
                return products
            soup = BeautifulSoup(response.content, 'html.parser')
            # Extraction logic here
            return products
        except Exception as e:
            print(f"Scraping error: {e}")
            return []
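The imports in step 1 include sleep and random, which the sample function does not use. One plausible use for them is a randomized pause between searches, so that requests are not sent back to back. The following is a minimal sketch of that idea, not part of the original script: crawl_queries, min_delay, and max_delay are hypothetical names, and scrape stands for any callable that takes a query and returns a list of products, such as scrape_amazon_products.

```python
import random
from time import sleep


def crawl_queries(queries, scrape, min_delay=2.0, max_delay=5.0, pause=sleep):
    """Run scrape(query) for each query, pausing a random interval between calls.

    scrape is any callable returning a list of products (for example
    scrape_amazon_products). pause is injectable so tests need not wait.
    """
    results = {}
    for index, query in enumerate(queries):
        if index > 0:
            # Randomized delay between requests; the bounds are illustrative only.
            pause(random.uniform(min_delay, max_delay))
        results[query] = scrape(query)
    return results
```

For example, crawl_queries(["wireless mouse", "usb hub"], scrape_amazon_products) would return a dictionary keyed by search query.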

Advanced Techniques for Automated Amazon Data Collection

Implementing advanced techniques is crucial to efficiently extracting data from Amazon while maintaining accuracy and reliability. Below are key strategies to enhance automated data collection:

- Proxy Rotation : Implementing a Proxy Rotation strategy helps prevent IP blocking by dynamically changing IP addresses, ensuring uninterrupted data extraction.

- Asynchronous Scraping : Leveraging Asynchronous Scraping significantly improves performance by keeping multiple requests in flight at once, which reduces total run time and increases throughput.

- Data Validation : Incorporating Data Validation mechanisms ensures data integrity by cross-checking extracted information, filtering out inaccuracies, and maintaining high-quality datasets.

- Error Handling : A well-structured Error Handling approach enables robust exception management, allowing the system to recover gracefully from failures and minimizing disruptions during the scraping process.

By integrating these techniques, businesses can improve the reliability, speed, and accuracy of their Amazon data collection processes.
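As an illustration of the asynchronous scraping technique, the sketch below uses asyncio to run many fetches concurrently while capping how many are in flight at once. It rests on several assumptions: fetch_all and max_concurrency are hypothetical names, and fetch is left abstract as any async callable taking a URL. The requests library used earlier is synchronous, so in practice fetch would wrap an async HTTP client such as aiohttp or httpx.

```python
import asyncio


async def fetch_all(urls, fetch, max_concurrency=5):
    """Fetch every URL concurrently, with at most max_concurrency in flight.

    fetch is an async callable taking a URL (hypothetical; it could wrap an
    async HTTP client). Failures are returned as exception objects in place
    of results, so one bad URL does not abort the batch.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        # The semaphore blocks here once max_concurrency fetches are running
        async with semaphore:
            return await fetch(url)

    # gather preserves input order; return_exceptions keeps errors per URL
    return await asyncio.gather(*(bounded(u) for u in urls), return_exceptions=True)
```

The concurrency cap also serves the error-handling goal in the list above: a lower limit puts less load on the target site and makes blocks less likely, at the cost of a longer run.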

Monitoring and Maintenance

Implementing a proactive monitoring and maintenance strategy is essential to ensuring the effectiveness and longevity of your Amazon Product Data Scraping process.

Regularly update your scraping script to address the following key factors:

- Website Structure Changes : Amazon frequently updates its website layout and code structure. Adjust your script accordingly to maintain seamless data extraction.

- New Anti-Scraping Technologies : Integrate advanced techniques such as rotating proxies, CAPTCHA-solving methods, and AI-based data extraction to stay ahead of evolving anti-scraping mechanisms.

- Performance Improvements : Optimize your script to enhance efficiency, speed, and accuracy, ensuring minimal downtime and maximum data retrieval success.

By consistently monitoring and refining your scraping solution, you can maintain reliable and high-quality data extraction while mitigating risks associated with website updates and security measures.
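One simple way to notice a website structure change is to track how often each field comes back empty. The sketch below assumes each scraped product is a dictionary in which a field that could not be extracted is stored as None; that convention, the function names, and the 0.5 threshold are all assumptions made for illustration.

```python
def missing_field_rates(products, fields=('name', 'price', 'rating')):
    """Return, per field, the fraction of scraped products where it is None."""
    total = len(products)
    if total == 0:
        # No data at all is itself a warning sign; report every field as fully missing.
        return {field: 1.0 for field in fields}
    return {
        field: sum(1 for p in products if p.get(field) is None) / total
        for field in fields
    }


def fields_needing_attention(products, threshold=0.5):
    """List fields whose missing rate exceeds threshold (an illustrative cutoff)."""
    rates = missing_field_rates(products)
    return [field for field, rate in rates.items() if rate > threshold]
```

If a field that was previously filled in reliably starts appearing in this list, the corresponding selector is a good first place to look.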

How Web Data Crawler Can Help You?

We offer a complete suite of Web Scraping Services designed to meet your unique business requirements.

Here’s how it adds value:

- Custom Scraper Development : Get tailored web scraping solutions built to extract the exact data you need from various online sources.

- Data Processing : Receive well-structured, clean, and ready-to-use datasets, ensuring accuracy and efficiency in your data-driven decision-making.

- Compliance Consulting : Stay ahead of legal complexities with expert guidance on ethical and regulatory aspects of web scraping.

- Continuous Support : Benefit from ongoing maintenance and performance optimization to keep your crawlers running smoothly.

- Advanced Analytics : Convert raw data into meaningful insights, enabling strategic business decisions backed by data-driven intelligence.

With our solution, you can effortlessly streamline data collection, enhance operational efficiency, and make informed business decisions.

Conclusion

Building a successful Amazon Web Crawler With Python In 2025 demands technical expertise, ethical considerations, and continuous adaptation. We are committed to optimizing your data collection strategies with cutting-edge solutions.

Ready to transform your Amazon Data Extraction process? Our experts can design a tailored solution to drive your business forward. Contact Web Data Crawler today and harness the power of intelligent web scraping!