How Can You Scrape Product Prices from Zepto, Blinkit, and Jiomart for More Innovative Pricing?

Mar 09

Introduction

In today’s fast-paced e-commerce environment, access to precise and up-to-date pricing data is critical for businesses striving to maintain a competitive edge. For retailers, distributors, and market analysts within the Indian grocery delivery sector, comprehending how prices fluctuate across key platforms such as Zepto, Blinkit, and Jiomart offers invaluable insights into pricing strategies, inventory management, and competitive positioning.

Scraping product prices from Zepto, Blinkit, and Jiomart has become more advanced, enabling businesses to collect detailed data without manual effort. This blog explores the techniques, tools, and best practices for extracting pricing information from these widely used quick commerce platforms, helping you make data-driven decisions that can positively impact your profitability.

Whether you are a retailer aiming to refine your pricing model, a market researcher tracking trends, or a developer creating a price comparison tool, learning how to efficiently scrape product prices from Zepto, Blinkit, and Jiomart will give you the data needed to stay ahead in the fast-moving online grocery market.

Understanding the Value of Price Scraping for Online Groceries

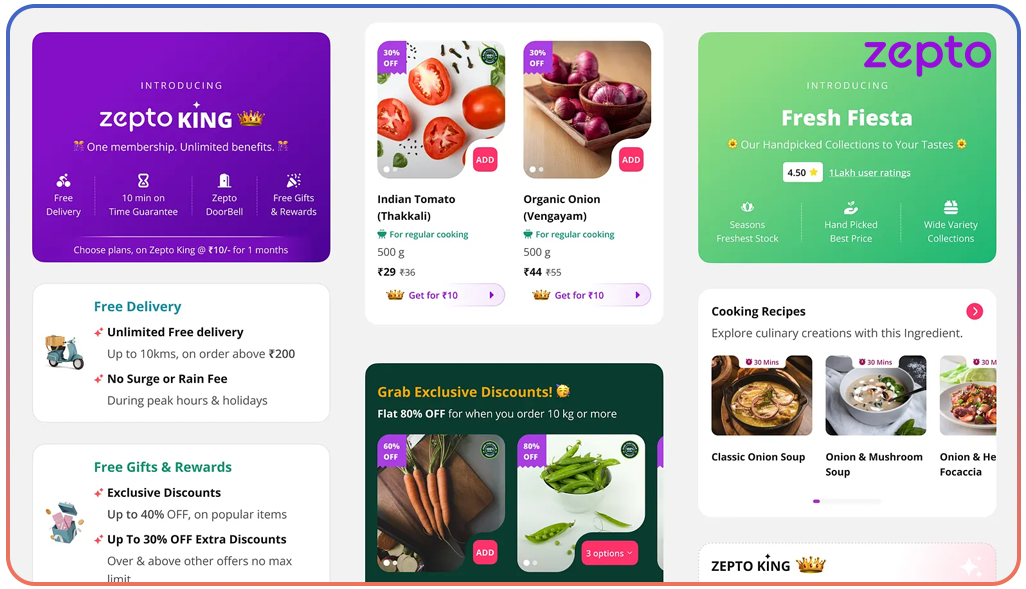

Before delving into the technical details of web scraping, it's essential to grasp the strategic value of real-time price comparison across Zepto, Blinkit, and Jiomart. The quick-commerce grocery sector in India has experienced rapid growth, with these three platforms emerging as dominant players. Their dynamic pricing strategies lead to frequent price fluctuations influenced by time of day, inventory levels, competitor prices, and seasonal demand.

By scraping online grocery prices effectively, businesses can unlock a wide range of advantages:

- Track competitor pricing in real-time: Monitor how Zepto, Blinkit, and Jiomart adjust their prices throughout the day and week, enabling businesses to stay competitive.

- Identify pricing patterns: Uncover trends, such as when specific platforms offer discounts or increase prices for particular categories, providing valuable timing insights.

- Optimize your pricing strategy: Adjust your pricing in response to competitor behavior, ensuring your prices remain competitive while maximizing both sales and profit margins.

- Enhance inventory management: Monitor shifts in product prices to identify popularity trends, allowing businesses to make informed decisions about stock levels and demand forecasting.

- Improve customer offerings: Use detailed pricing insights to create targeted promotions or bundled offers, improving customer satisfaction and engagement.

These insights are invaluable in a market where consumers are highly price-sensitive, and even minor price changes can significantly impact customer loyalty.

Comparison of Major Quick Commerce Platforms in India

A detailed comparison of India's key quick commerce platforms, highlighting their delivery times, inventory models, price update frequency, and unique features.

Legal and Ethical Considerations

Before deploying real-time grocery price tracking through web scraping, it’s crucial to recognize the legal and ethical responsibilities that come with it.

Here are key factors to keep in mind:

Terms of Service Compliance

Most e-commerce platforms have Terms of Service that specifically address data scraping. Before extracting pricing data from platforms like Zepto, Blinkit, and Jiomart for dynamic pricing purposes, it is essential to thoroughly review each platform's Terms of Service to ensure full compliance with their policies.

Data Use Limitations

The data gathered via web scraping should be used exclusively for analysis and insight. It should not be used to replicate or misrepresent the offerings of the original platforms, as this could result in legal or reputational risks.

Responsible Scraping Practices

To minimize the impact on the target websites and ensure ethical scraping, implement the following responsible practices:

- Adhere to robots.txt directives.

- Introduce delays between requests to avoid overloading the site.

- Use appropriate user agents to identify the nature of your scraping activity.

- Limit the number of concurrent requests to maintain site stability.
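As a rough illustration of the first three practices, the sketch below uses Python's standard `urllib.robotparser` module to filter URLs against a robots.txt file and to pick a delay between requests. The robots.txt content, the `example.com` URLs, and the `example-price-bot` user agent are all made up for this example; a real scraper would download the actual robots.txt from the site it targets.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration; a real scraper would
# download this file from the target site before crawling.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

USER_AGENT = "example-price-bot"  # hypothetical name identifying your scraper


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_delay(parser, user_agent=USER_AGENT, default=1.0):
    """Use the declared Crawl-delay if there is one, else a default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


robots = build_parser(SAMPLE_ROBOTS_TXT)
candidates = [
    "https://example.com/products/milk",
    "https://example.com/checkout/cart",
]
to_fetch = allowed_urls(robots, candidates)   # the checkout URL is dropped
pause_seconds = polite_delay(robots)          # seconds to sleep between requests
```

Fetching the URLs in `to_fetch` one at a time, with a `time.sleep(pause_seconds)` between requests, also keeps concurrency at a single request.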

Privacy Considerations

If any personal data is inadvertently collected during the scraping process, it must be immediately deleted and not stored or processed further. Businesses should take proactive steps to protect user privacy and ensure compliance with data protection regulations.

By adhering to these guidelines, businesses can implement price comparison scraping for online grocery stores in India while complying with legal and ethical standards.

Technical Prerequisites for Web Scraping

To effectively implement a web scraping solution for online grocery platforms, the following technical prerequisites are essential:

Programming Knowledge

A strong understanding of programming languages, particularly Python, is crucial. Python provides a rich ecosystem of libraries such as BeautifulSoup, Scrapy, and Selenium, which easily facilitate data extraction from websites.

Understanding of HTML/CSS

A fundamental grasp of how web pages are structured using HTML and CSS is necessary. This knowledge allows you to accurately locate and target specific elements on a page, such as price tags, product names, and other relevant data points.

API Knowledge

Some grocery platforms offer APIs that provide direct access to data. Although APIs can be a convenient alternative to scraping, they often come with limitations, such as restricted data or access caps, so understanding their use and limitations can be beneficial.

Proxy Management

To execute large-scale scraping efficiently, it’s essential to employ proxy management techniques. This involves rotating IP addresses to prevent your scraper from being blocked or flagged for excessive requests. Without proper proxy management, scraping efforts may be hindered due to rate-limiting or IP bans.

Data Storage

After gathering the pricing data, a solid database system is necessary to store and manage the information. Depending on the data's structure and volume, relational databases (e.g., MySQL or PostgreSQL) or NoSQL solutions (e.g., MongoDB) are commonly used for this purpose.
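To make the storage step concrete, here is a minimal sketch using SQLite from Python's standard library as a stand-in for a relational database such as MySQL or PostgreSQL. The table layout, the column names, and the sample prices are assumptions made for illustration, not a schema prescribed by any platform.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a SQLite database with a simple price-history table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS prices (
            platform   TEXT NOT NULL,
            product    TEXT NOT NULL,
            price_inr  REAL NOT NULL,
            scraped_at TEXT NOT NULL  -- ISO 8601 timestamp
        )
        """
    )
    return conn


def record_price(conn, platform, product, price_inr, scraped_at):
    """Append one observed price; history is kept rather than overwritten."""
    conn.execute(
        "INSERT INTO prices VALUES (?, ?, ?, ?)",
        (platform, product, price_inr, scraped_at),
    )
    conn.commit()


def latest_prices(conn, product):
    """Return the most recent price per platform for one product."""
    rows = conn.execute(
        """
        SELECT p.platform, p.price_inr
        FROM prices AS p
        WHERE p.product = ?
          AND p.scraped_at = (
              SELECT MAX(scraped_at) FROM prices
              WHERE platform = p.platform AND product = p.product
          )
        ORDER BY p.platform
        """,
        (product,),
    ).fetchall()
    return dict(rows)


# Made-up observations for illustration only.
store = open_store()
record_price(store, "zepto", "milk-500ml", 30.0, "2024-01-01T08:00:00")
record_price(store, "zepto", "milk-500ml", 32.0, "2024-01-01T18:00:00")
record_price(store, "blinkit", "milk-500ml", 31.0, "2024-01-01T09:00:00")
current = latest_prices(store, "milk-500ml")
```

Keeping every observation, rather than only the latest price, is what makes it possible to analyze how prices change over the day or week.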

Tools and Libraries for Web Scraping

When it comes to extracting Zepto, Blinkit, and Jiomart prices for dynamic pricing strategies, various tools and libraries can streamline the process:

Python Libraries

Python offers a vast ecosystem of libraries that can aid in scraping. Some of the most popular ones include:

- BeautifulSoup: A powerful library for parsing HTML and extracting data from static web pages, enabling seamless data extraction.

- Scrapy: A robust and scalable framework for large-scale web scraping projects, ideal for handling complex websites.

- Selenium: Perfect for scraping dynamic websites that rely on JavaScript to load content, making it suitable for modern web applications.

- Requests: A straightforward HTTP library that simplifies making web requests and retrieving data from websites.

- Pandas: After data extraction, Pandas helps organize, clean, and analyze scraped data.

Commercial Tools

Commercial scraping tools provide an intuitive alternative to programming for those who prefer a more streamlined, no-code or low-code experience.

Some notable options include:

- Octoparse: A user-friendly, no-code tool that makes web scraping accessible to users with minimal programming experience.

- ParseHub: Known for its advanced features, ParseHub can scrape complex websites, including those with intricate structures.

- ScrapeStorm: An AI-powered scraping tool with an intuitive visual interface that enhances the ease of data collection and processing.

- Bright Data (formerly Luminati): A premium tool offering proxy solutions tailored for web scraping, ensuring anonymity and data integrity.

These tools and libraries are vital for businesses and individuals looking to gather pricing data from Zepto, Blinkit, and Jiomart, enabling them to implement effective dynamic pricing strategies.

Web Scraping Tools Comparison

When evaluating different web scraping tools, it’s crucial to consider key aspects such as coding requirements, learning curve, and suitability for specific projects. Here’s a detailed comparison of some popular web scraping tools based on these factors:

Step-by-Step Guide to Scrape Product Prices from Zepto

Scraping product prices from Zepto requires a thorough understanding of the platform's structure and the implementation of effective scraping techniques.

Here's a detailed guide to help you achieve this:

Analyze Zepto's Website Structure

Start by inspecting the HTML structure of Zepto's product pages. To do this:

- Right-click on a product price and select "Inspect" or "Inspect Element."

- Identify the specific HTML elements that contain the price information.

- Look for consistent patterns in how products and prices are organized across the site.

Set Up Your Environment

Before you start scraping, ensure that your environment is set up correctly.

This includes:

- Choosing your programming language (such as Python or Node.js).

- Installing necessary libraries (like BeautifulSoup, Selenium, or Scrapy).

- Setting up a database to store the data collected from the website.

Create a Basic Scraper for Zepto

Develop a basic scraper with functionality to:

- Send HTTP requests to Zepto's product pages to retrieve the HTML content.

- Use an HTML parser to extract product information such as name, price, and other relevant details.

- Implement basic error handling to address potential issues (e.g., failed requests) and retry mechanisms.

- Store the scraped data in a structured format such as CSV or JSON or directly in a database.
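The four steps above can be sketched roughly as follows. This example relies only on Python's standard library (`html.parser` instead of BeautifulSoup) so that it runs without extra installs. The HTML snippet and its class names (`product-card`, `product-name`, `product-price`) are invented for illustration and do not reflect Zepto's real markup, and the `fetch` argument is a placeholder for whatever function actually downloads a page.

```python
import csv
import io
import time
from html.parser import HTMLParser

# Invented markup for illustration; real pages will use different class names.
SAMPLE_HTML = """
<div class="product-card">
  <span class="product-name">Toned Milk 500 ml</span>
  <span class="product-price">₹32</span>
</div>
<div class="product-card">
  <span class="product-name">Brown Bread 400 g</span>
  <span class="product-price">₹45.50</span>
</div>
"""


class ProductParser(HTMLParser):
    """Collect name and price from each (hypothetical) product card."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field that the next text node belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product-card" in classes:
            self.products.append({})
        elif "product-name" in classes:
            self._field = "name"
        elif "product-price" in classes:
            self._field = "price"

    def handle_data(self, data):
        text = data.strip()
        if self._field and text and self.products:
            if self._field == "price":
                text = float(text.replace("₹", "").replace(",", ""))
            self.products[-1][self._field] = text
            self._field = None


def parse_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


def fetch_with_retries(fetch, url, attempts=3, backoff=1.0):
    """Call fetch(url), retrying on network errors with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except OSError:  # urllib and requests network errors derive from OSError
            if attempt == attempts:
                raise
            time.sleep(backoff * 2 ** (attempt - 1))


def to_csv(products):
    """Serialize the parsed products as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()


products = parse_products(SAMPLE_HTML)
csv_text = to_csv(products)
```

In a real scraper, `fetch` could wrap `requests.get(url).text`, and the CSV text could be written to a file or replaced with inserts into a database.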

Handle Dynamic Content

Since Zepto often loads product information dynamically using JavaScript, your scraper must account for this.

In such cases:

- Use a browser automation tool like Selenium or Puppeteer that can execute JavaScript and wait for the content to load fully before attempting to scrape the data.
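The core idea behind "waiting for the content to load" is an explicit wait: poll the page until the elements you need appear, or give up after a timeout. Selenium provides this through its `WebDriverWait` class; the sketch below shows the same pattern in plain Python so it can run without a browser. `FakePage` and its `find_prices` method are hypothetical stand-ins for a real browser page and an element lookup.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Poll condition() until it returns something truthy or timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("content did not load in time")
        time.sleep(poll_interval)


class FakePage:
    """Hypothetical page whose prices only appear after a few polls."""

    def __init__(self, ready_after):
        self.polls = 0
        self.ready_after = ready_after

    def find_prices(self):
        # Simulates JavaScript filling in the price elements after a delay.
        self.polls += 1
        return ["₹32", "₹45"] if self.polls >= self.ready_after else []


page = FakePage(ready_after=3)
prices = wait_until(page.find_prices, timeout=5.0, poll_interval=0.0)
```

With a real browser, the `condition` would be a lookup for the price elements, and the timeout protects the scraper from hanging on pages that never finish loading.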

Implement Proxy Rotation

To extract real-time product prices from Zepto at scale, it's crucial to implement proxy rotation to avoid IP bans and rate limiting.

This can be achieved by:

- Using multiple IP addresses through a proxy service or maintaining your own proxy network.

- Regularly rotating these proxies to distribute the requests and maintain anonymity while scraping real-time product prices from Zepto.
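A simple way to rotate proxies is round-robin: hand out each proxy in turn and drop any that get blocked. The sketch below assumes a list of placeholder proxy URLs (the `proxy-*.example` hosts are not real) and shows how the chosen proxy could be shaped into the mapping that the `requests` library accepts through its `proxies` argument.

```python
from collections import deque

# Placeholder proxy URLs; substitute the ones from your proxy provider.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


class ProxyRotator:
    """Hand out proxies in round-robin order and allow dropping bad ones."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = deque(proxies)

    def next_proxy(self):
        """Return the next proxy URL and move it to the back of the queue."""
        if not self._pool:
            raise RuntimeError("no working proxies left")
        proxy = self._pool[0]
        self._pool.rotate(-1)
        return proxy

    def discard(self, proxy):
        """Remove a proxy that was blocked or keeps failing."""
        if proxy in self._pool:
            self._pool.remove(proxy)


def as_requests_proxies(proxy):
    """Build the mapping expected by the `proxies` argument of requests."""
    return {"http": proxy, "https": proxy}


rotator = ProxyRotator(PROXIES)
first = rotator.next_proxy()
request_kwargs = {"proxies": as_requests_proxies(first), "timeout": 10}
```

Each request would then call `rotator.next_proxy()` again, and a proxy that starts returning errors or bans can be taken out of rotation with `discard`.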

This step-by-step guide should help create an efficient and reliable scraping process for Zepto's product prices.

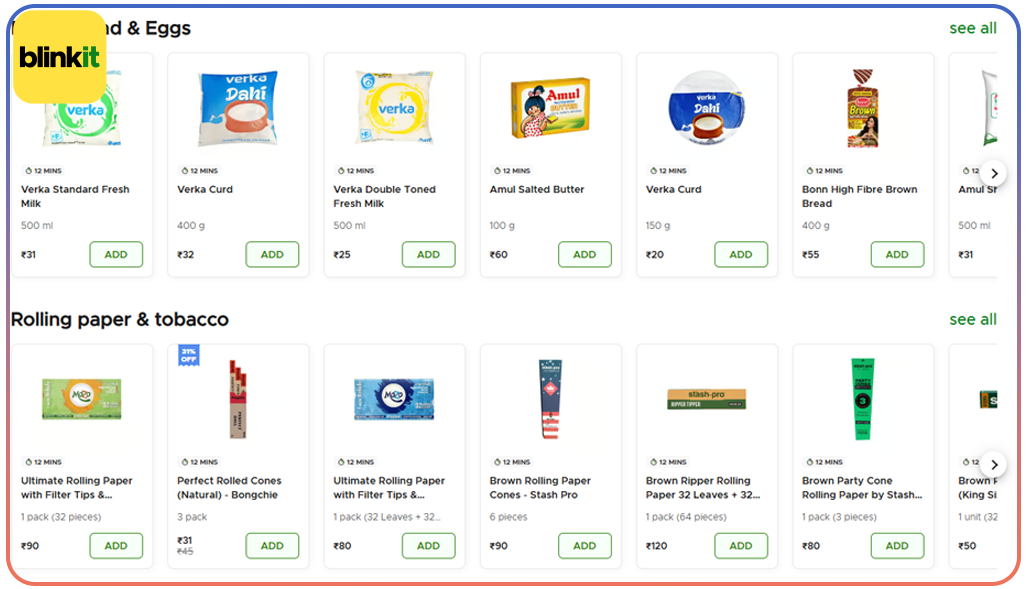

Extracting Price Data from Blinkit

Price extraction from Blinkit follows similar principles to other platforms but requires specific adjustments tailored to Blinkit’s website structure.

Here's a detailed approach to ensure accurate data scraping:

Understand Blinkit’s Structure

Blinkit has a distinct website layout compared to platforms like Zepto. The product data is often organized differently, so it's essential to focus on key areas such as:

- Category Pages vs. Product Detail Pages: Recognize the structural differences between the listing and individual product pages.

- Variants and Sizes: Pay attention to how product variants (such as different sizes or colors) are displayed.

- Unique Price Elements: Ensure that regular and discounted prices are captured, especially for products on sale.

Create a Blinkit-Specific Scraper

Your scraper needs to be customized to handle Blinkit’s unique HTML structure and how its data is organized.

Key considerations include:

- Handling Multiple Variants: Develop logic to deal with products that come in various options.

- Discounted Products: Account for products that show the original price and sale price.

- Quantity-Based Pricing: Ensure that the scraper can capture products that vary in price based on quantity.

- Regional Pricing Variations: Consider how regional pricing might differ, ensuring data is extracted accurately for different locations.
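One way to keep these considerations manageable is to store every scraped variant as its own record, with the original price, the sale price, the pack size, and the location kept side by side. The data model below is a sketch under that assumption; the field names and sample values are invented and are not taken from Blinkit's pages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantPrice:
    """One scraped price for one variant of a product in one city."""

    product: str
    variant: str       # label as shown on the page, e.g. "500 ml"
    quantity: float    # numeric pack size, in `unit`
    unit: str          # e.g. "ml" or "g"
    mrp: float         # original (list) price in rupees
    sale_price: float  # price actually charged, equal to mrp if no discount
    city: str

    @property
    def discount_percent(self):
        """Discount relative to the original price, in percent."""
        if self.mrp <= 0:
            return 0.0
        return round((self.mrp - self.sale_price) / self.mrp * 100, 1)

    @property
    def price_per_unit(self):
        """Sale price per ml or g, for comparing pack sizes."""
        return round(self.sale_price / self.quantity, 4)


# Invented sample records for illustration.
variants = [
    VariantPrice("Toned Milk", "500 ml", 500, "ml", 40.0, 32.0, "Mumbai"),
    VariantPrice("Toned Milk", "1 L", 1000, "ml", 80.0, 60.0, "Mumbai"),
]
best_value = min(variants, key=lambda v: v.price_per_unit)
```

Normalizing to a per-unit price makes quantity-based pricing comparable across pack sizes, and the `city` field keeps regional prices from being mixed together.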

Handle Pagination for Complete Data

Blinkit's product listings often span multiple pages within a category, so handling pagination effectively is crucial. Implement logic to navigate through every page so that each product across all categories is scraped, giving you a comprehensive dataset.
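A generic pagination loop might look like the sketch below. It assumes the listing can be requested page by page through some `fetch_page(page_number)` function that returns an empty list once the pages run out; whether Blinkit exposes numbered pages, a "load more" button, or infinite scroll needs to be checked on the site itself. The `fake_fetch_page` function and its catalogue are invented for the demonstration.

```python
def scrape_all_pages(fetch_page, max_pages=100):
    """Collect products page by page until an empty page is returned."""
    products = []
    seen_ids = set()
    for page in range(1, max_pages + 1):
        items = fetch_page(page)
        if not items:
            break  # no more results
        for item in items:
            # Skip duplicates that can appear when listings shift between pages.
            if item["id"] not in seen_ids:
                seen_ids.add(item["id"])
                products.append(item)
    return products


# Invented catalogue standing in for a category listing.
CATALOGUE = [
    {"id": i, "name": f"Product {i}", "price": 10.0 * i} for i in range(1, 6)
]
PAGE_SIZE = 2


def fake_fetch_page(page):
    """Return one page of the invented catalogue (empty past the end)."""
    start = (page - 1) * PAGE_SIZE
    return CATALOGUE[start:start + PAGE_SIZE]


all_products = scrape_all_pages(fake_fetch_page)
```

The `max_pages` cap is a safety net so that a listing which never returns an empty page cannot keep the scraper running indefinitely.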

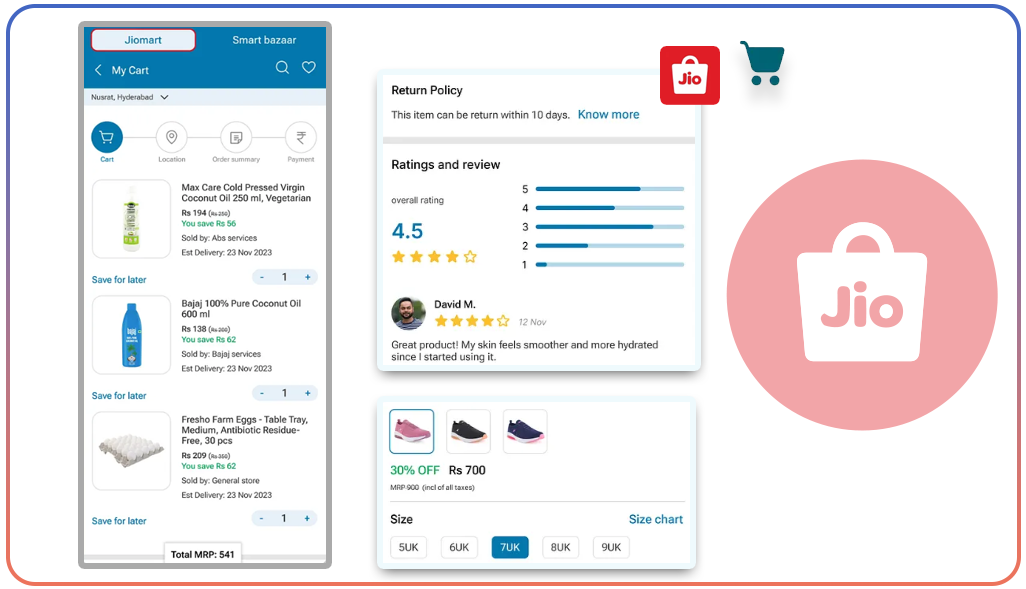

Implementing Jiomart Web Scraping

Effective price extraction from Jiomart requires a deep understanding of the platform's unique structure and challenges. Here's a breakdown of the key steps to ensure successful scraping:

Analyze Jiomart's Website Architecture

Jiomart has a more intricate structure, with several features that need attention:

- Regional price variations: Prices vary depending on the delivery region.

- Multiple product views (grid/list): The site offers different ways to display product listings, each requiring distinct handling.

- Advanced filtering options: Filters available on the platform can affect the data you need to scrape.

Create a Jiomart Scraper

Developing an effective Jiomart scraper involves addressing the platform's distinctive features and challenges.

Key aspects to consider include:

- Session management: Handling session cookies is crucial for maintaining an active session throughout the scraping process.

- Location-based pricing: The scraper must factor in that the prices presented depend on the delivery location.

- Filter handling: Account for the platform's filtering options, which can affect the data you need to scrape.

Handle Location-Based Pricing

Jiomart displays different prices based on the customer's delivery location, so your scraper must account for this variation.

Here’s how to manage it:

- Set and maintain the delivery location: Use cookies or session variables to set the delivery region and keep it consistent throughout the scraping process.

- Understand the impact of location: Make sure your scraper records how location affects both pricing and product availability.

- Collect pricing data from multiple locations: Gather prices across various delivery locations for thorough analysis.
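The loop below sketches how prices for one product could be collected across several delivery locations and then compared. The cookie name `delivery_pincode`, the product ID, and the prices are all hypothetical; how Jiomart actually stores the delivery location has to be determined by inspecting the site. `fetch_price` is a placeholder for the function that performs the real request with the given cookies.

```python
def collect_by_location(fetch_price, product_id, pincodes):
    """Fetch one product's price for each delivery pincode."""
    prices = {}
    for pincode in pincodes:
        # Hypothetical cookie name; the real site may use a different key.
        cookies = {"delivery_pincode": pincode}
        prices[pincode] = fetch_price(product_id, cookies)
    return prices


def price_spread(prices):
    """Summarize the cheapest and costliest locations, ignoring unavailable ones."""
    available = {loc: p for loc, p in prices.items() if p is not None}
    if not available:
        return None
    cheapest = min(available, key=available.get)
    costliest = max(available, key=available.get)
    return {
        "cheapest": cheapest,
        "costliest": costliest,
        "spread": round(available[costliest] - available[cheapest], 2),
    }


# Invented prices per pincode; None marks "not available at this location".
FAKE_PRICES = {"400001": 64.0, "110001": 62.0, "560001": None}


def fake_fetch_price(product_id, cookies):
    """Stand-in for a real request that sends the location cookie."""
    return FAKE_PRICES[cookies["delivery_pincode"]]


by_location = collect_by_location(fake_fetch_price, "milk-1l", list(FAKE_PRICES))
summary = price_spread(by_location)
```

Recording `None` for locations where a product is not offered keeps availability differences visible instead of silently dropping them.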

How Web Data Crawler Can Help You?

We offer specialized Online Grocery Price Scraping Services designed to empower businesses by providing seamless access to valuable pricing data.

Our expertise and cutting-edge solutions ensure that your business benefits from:

- Automated Data Extraction: We use advanced scraping techniques to extract Zepto, Blinkit, and Jiomart prices for dynamic pricing strategies.

- Real-Time Monitoring: Our comprehensive Online Grocery Price Monitoring Solutions let you stay informed about real-time price changes across various platforms, giving you a competitive edge in the marketplace.

- Custom Solutions: We provide tailored web scraping solutions designed to meet your business's unique requirements, ensuring precision and relevance in the data you receive.

- Secure and Compliant Methods: We prioritize ethical scraping practices to guarantee that all data collection methods adhere to platform policies and industry standards, ensuring security and compliance.

With these core offerings, we help businesses leverage crucial market insights and streamline their pricing strategies.

Conclusion

Access to accurate and timely pricing data is crucial for success in the competitive online grocery market. By implementing effective strategies to scrape product prices from Zepto, Blinkit, and Jiomart, businesses can uncover valuable insights into market trends, competitor strategies, and consumer behavior.

Whether developing in-house scraping capabilities or partnering with a specialized provider like us, the key is leveraging this data strategically for informed pricing decisions, inventory management, and overall business strategy.

Contact Web Data Crawler today to discover how our customized data extraction solutions can help your business stay ahead in the fast-paced online grocery industry. Our expert team is ready to assist in transforming raw data into actionable insights that drive growth and profitability.